That looks like a DDoS. For instance, that doesn’t ever happen on my ISP as they have some kind of DDoS protection running, akin to what we would see on a decent cloud provider. Not sure what tech they’re using, but there’s certainly some kind of rate limiting there.

- Isolate the server from your main network as much as possible. If possible have it on a different public IP either using a VLAN or better yet with an entire physical network just for that - avoids VLAN hopping attacks and DDoS attacks to the server that will also take your internet down;

In my case I can simply have a bridged setup where my Internet router gets one public IP and the exposed services get another / different public IP. If there’s ever a DDoS, the server might be hammered with requests and go down, but unless they exhaust my full bandwidth my home network won’t be affected.

Another advantage of having a bridged setup with multiple IPs is that when there’s a DDoS/bruteforce then your router won’t have to process all the requests coming in, they’ll get dispatched directly to your server without wasting your router’s CPU.

As we can see, this thing about exposing IPs depends on very specific implementation details of your ISP or your setup so… it may or may not be dangerous.

Oh well, if you think you’re good with Docker go ahead and use it, it does work but has its own dark side…

cause its like a micro Linux you can reliably bring up and take down on demand

If that’s what you’re looking for, maybe a look at Incus/LXD/LXC or systemd-nspawn will be interesting for you.

I hope the rest can help you have a more secure setup. :)

Another thing that you can consider is: instead of exposing your services directly to the internet, use a VPS as a tunnel / reverse proxy for your local services. This way only the VPS IP will be exposed to the public (and will be a static and stable IP) and nobody can access the services directly.

client —> VPS —> local server

The TL;DR is installing a WireGuard “server” on the VPS and then having your local server connect to it. Then set up something like nginx on the VPS to accept traffic on port 80/443 and forward it to whatever you’re running on the home server through the tunnel.
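To make the forwarding step a bit more concrete, here is a minimal sketch in Python. It is only a plain TCP relay standing in for what nginx would do on the VPS, not a replacement for it, and the names (`pipe`, `start_forwarder`) as well as the addresses in the usage note are made up for illustration.

```python
import asyncio


async def pipe(reader, writer):
    """Copy bytes from reader to writer until the reading side hits EOF."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        # Closing the writer tells the other end that this direction is done.
        writer.close()


async def start_forwarder(listen_host, listen_port, target_host, target_port):
    """Listen on the VPS side and relay every connection to the target.

    In the setup described above the target would be the home server's
    address inside the WireGuard tunnel (an assumption of this sketch).
    """

    async def handle(client_reader, client_writer):
        try:
            upstream_reader, upstream_writer = await asyncio.open_connection(
                target_host, target_port
            )
        except OSError:
            # Home server unreachable through the tunnel: drop the client.
            client_writer.close()
            return
        # Relay both directions at the same time.
        await asyncio.gather(
            pipe(client_reader, upstream_writer),
            pipe(upstream_reader, client_writer),
            return_exceptions=True,
        )

    return await asyncio.start_server(handle, listen_host, listen_port)
```

On the VPS you would await something like `start_forwarder("0.0.0.0", 443, "10.0.0.2", 8080)` and then call `serve_forever()` on the returned server, where `10.0.0.2` and `8080` are placeholders for the home server's tunnel IP and service port. In practice nginx is still the better choice since it also handles TLS and HTTP details.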

I personally don’t think there’s much risk with exposing your home IP as part of your self hosting but some people do. It also depends on what protection your ISP may offer and how likely you think a DDoS attack is. If your ISP provides you with a dynamic IP it may not even matter, as a simple router reboot should give you a new IP.

It depends on what you’re self-hosting and if you want / need it exposed to the Internet or not. When it comes to software the current hype is to set up a minimal Linux box (old computer, NAS, Raspberry Pi) and then install everything using Docker containers. I don’t like this Docker trend because it 1) leads you towards a dependence on proprietary repositories and 2) robs you of the experience of learning Linux (more here), but it does lower the bar for newcomers and lets you set up something really fast. In my opinion you should be very skeptical about everything that is “sold to the masses”: just go with a simple Debian system (command line only), SSH into it and install what you really need, take your time to learn Linux and whatnot.

Strictly speaking about security: if we’re talking about LAN only, things are easy and you don’t have much to worry about as everything will be inside your network and thus protected by your router’s NAT/Firewall.

For internet facing services your basic requirements are:

- Some kind of domain / subdomain, paid or free;

- Preferably a home ISP that provides public IP addresses - no CGNAT BS;

- Ideally a static IP at home, but you can do just fine with a dynamic DNS service such as https://freedns.afraid.org/.

Quick setup guide and checklist:

- Create your subdomain for the dynamic DNS service https://freedns.afraid.org/ and install the daemon on the server - will update your domain with your dynamic IP when it changes;

- List what ports you need remote access to;

- Isolate the server from your main network as much as possible. If possible have it on a different public IP either using a VLAN or better yet with an entire physical network just for that - avoids VLAN hopping attacks and DDoS attacks to the server that will also take your internet down;

- If you’re using VLANs then configure your switch properly. Decent switches allow you to restrict the WebUI to a certain VLAN / physical port - this will make sure that if your server is hacked they won’t be able to access the switch’s UI and reconfigure their own port to access the entire network. Note that cheap TP-Link switches usually don’t have a way to specify this;

- Configure your ISP router to assign a static local IP to the server and port forward what’s supposed to be exposed to the internet to the server;

- Only expose required services (nginx, game server, program x) to the Internet. Everything else such as SSH, configuration interfaces and whatnot can be moved to another private network and/or a WireGuard VPN you can connect to when you want to manage the server;

- Use custom ports with 5 digits for everything - something like 23901 (up to 65535) to make your service(s) harder to find;

- Disable IPv6? Might be easier than dealing with a dual stack firewall and/or other complexities;

- Use nftables / iptables / another firewall and set it to drop everything but those ports you need for services and management VPN access to work - 10 minute guide;

- Configure nftables to only allow traffic coming from public IP addresses (IPs outside your home network IP / VPN range) to the Wireguard or required services port - this will protect your server if by some mistake the router starts forwarding more traffic from the internet to the server than it should;

- Configure nftables to restrict what countries are allowed to access your server. Most likely you only need to allow incoming connections from your country and more details here.
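The "only exposed ports, only from public addresses" rule from the checklist can be sketched as a small decision function. This is a Python model of the policy, not an nftables ruleset, and the names (`EXPOSED_PORTS`, `should_accept`) are hypothetical. Port 51820 is assumed here because it is WireGuard's usual default, and 23901 is the example custom port from the list.

```python
import ipaddress

# Assumed for illustration: WireGuard on its usual default port plus the
# example 5-digit custom service port mentioned in the checklist.
EXPOSED_PORTS = {51820, 23901}


def should_accept(src_ip, dst_port, exposed_ports=EXPOSED_PORTS):
    """Return True only for traffic a strict internet-facing rule would allow.

    Everything that is not explicitly allowed is dropped:
    - the destination port must be one of the exposed ports;
    - the source must be a public (globally routable) address, so private
      ranges such as the home LAN or a VPN subnet are rejected here.
    """
    if not 1 <= dst_port <= 65535:
        return False
    if dst_port not in exposed_ports:
        return False
    try:
        address = ipaddress.ip_address(src_ip)
    except ValueError:
        # Not a valid IP address at all: drop.
        return False
    return address.is_global
```

For example, `should_accept("8.8.8.8", 51820)` is accepted while `should_accept("192.168.1.10", 51820)` and `should_accept("8.8.8.8", 22)` are dropped. Management access from the LAN / VPN would be covered by separate rules that this sketch leaves out.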

Realistically speaking, if you’re doing this just for a few friends why not require them to access the server through a WireGuard VPN? This will reduce the risk a LOT and probably won’t impact the performance. Here’s a decent setup guide and you might use this GUI to add/remove clients easily.

Don’t be afraid to expose the WireGuard port, because if someone tries to connect and doesn’t authenticate with the right key the server will silently drop the packets.

Now if your ISP doesn’t provide you with a public IP / port forwarding abilities you may want to read this in order to find out why you should avoid Cloudflare tunnels and how to set up an alternative / more private solution.

Is there a use case for CrowdStrike on any platform? No, there isn’t. Anything that messes with the kernel at that level should be considered a security threat on the basis of potential service disruption / threat to business continuity. Do you really want to run a closed source piece of malware as a kernel module?

They completely fuck over their customers in the business continuity aspect, they become the problem and I bet that most companies would never suffer any catastrophic failure this bad if they didn’t run their software at all. No hacker would be able to take down so many systems so fast and so hard.

While I don’t totally disagree with you, this has mostly nothing to do with Windows and everything to do with a piece of corporate spyware garbage that some IT Manager decided to install. If tools like that existed for Linux, doing what they do to the OS, trust me, we would be seeing kernel panics as well.

After some time, the domain fully expired and GoDaddy decided to buy it as soon as it did,

Oh yeah, that’s what happens when you pick scammy domain registrars. It is very possible that Epik auctioned your domain (after all they kept it after the expiry date and paid fees) and then GoDaddy snatched it. This is what usually happens.

SMTP with good delivery and whatnot is entirely possible; it just takes an IP with a good reputation and enough patience to read and understand the ISPmail guide and a few other details. Running a CA is a security vulnerability and a major pain if you plan to deploy it to the devices of your entire family.

I like the web UI as well, but since i use an iPhone i wasn’t really able to be able to set up the browser with the cert

One thing you can do (that I have in a corporate setup) is to set up a reverse proxy in front of the WebUI and have it manage user authentication. Essentially nginx authenticates users against the company Keycloak IdP that provides SSO and whatnot. You can do it with simple HTTP basic auth or some simpler solution like phpAuthRequest.

- https://community.openhab.org/t/using-nginx-reverse-proxy-authentication-and-https/14542

- https://docs.nginx.com/nginx/admin-guide/security-controls/securing-http-traffic-upstream/

- https://www.ibm.com/docs/en/order-management?topic=platform-configuring-reverse-proxy-nginx (not for Incus but the nginx config is similar)

- https://github.com/kendokan/phpAuthRequest
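For the simple HTTP basic auth route, the check that the proxy performs before passing a request on to the WebUI boils down to something like the sketch below. It is written in Python only to show the header format. nginx does this through its own configuration, and the function name `check_basic_auth` and the credentials in the usage note are made up.

```python
import base64
import binascii
import hmac


def check_basic_auth(header, user, password):
    """Validate an 'Authorization: Basic ...' header value.

    The header carries base64("username:password"). Returns True only when
    both parts match the expected credentials.
    """
    if not header:
        return False
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic":
        return False
    try:
        decoded = base64.b64decode(encoded.strip(), validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        # Malformed base64 or non-text payload: treat as unauthenticated.
        return False
    got_user, separator, got_password = decoded.partition(":")
    if not separator:
        return False
    # compare_digest avoids leaking how many characters matched via timing.
    user_ok = hmac.compare_digest(got_user.encode(), user.encode())
    password_ok = hmac.compare_digest(got_password.encode(), password.encode())
    return user_ok and password_ok
```

With the hypothetical credentials `alice` / `s3cret`, a browser would send `Basic ` followed by the base64 of `alice:s3cret`, and anything else gets rejected. Keep in mind basic auth only makes sense over HTTPS since the credentials are merely encoded, not encrypted.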

thanks again for the recommendation.

You’re welcome, enjoy.

While I agree with you, an attacker may not need to go to such lengths in order to get the PK. The admin might misplace it or have a backup somewhere in plain text. People also aren’t prone to look at logs and it might be too late when they actually notice that the CA was compromised.

Managing an entire CA safely and deploying certificates > complex; Getting let’s encrypt certificates using DNS challenges > easy;

Just be aware of the risks involved with running your own CA.

You’re adding a root certificate to your systems that will effectively accept any certificate issued with your CA’s key. If your PK gets stolen somehow and you don’t notice it, someone might be issuing certificates that are valid for those machines. Real CAs also have ways to revoke certificates that are checked by browsers (OCSP and CRLs), and they may employ other techniques such as cross signing and chains of trust. All those make it so a compromised certificate is revoked and not trusted by anyone after the fact.

For what it’s worth, LetsEncrypt with the DNS-01 challenge is way easier to deploy and maintain on your internal hosts than adding a CA and dealing with all the devices that might not like custom CAs. Also more secure.
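Part of why DNS-01 works nicely for internal hosts is that the proof is just a TXT record, so nothing has to be reachable from the internet. As a rough sketch of what an ACME client computes (per RFC 8555), here is the record name and value in Python. The helper names are made up, and the token and thumbprint in the usage note are placeholders, since the real ones come from the ACME server and your account key.

```python
import base64
import hashlib


def dns01_record_name(domain):
    """Name of the TXT record to create for a given (possibly wildcard) domain."""
    domain = domain.rstrip(".")
    if domain.startswith("*."):
        # A wildcard is validated on its base domain.
        domain = domain[2:]
    return "_acme-challenge." + domain


def dns01_txt_value(token, account_thumbprint):
    """TXT record value for a DNS-01 challenge.

    The key authorization is "<token>.<thumbprint>", and the record holds the
    unpadded base64url encoding of its SHA-256 digest.
    """
    key_authorization = f"{token}.{account_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
```

For instance `dns01_record_name("*.home.example.com")` gives `_acme-challenge.home.example.com`, and `dns01_txt_value("placeholder-token", "placeholder-thumbprint")` gives the 43-character string to publish there. In practice your ACME client and its DNS plugin do all of this for you, this is only to show there is no magic involved.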

Just be aware of the risks involved with running your own CA.

Yes, LetsEncrypt with DNS-01 challenge is the easiest way to go. Be it a single wildcard for all hosts or not.

Running a CA is cool however, just be aware of the risks involved with running your own CA.

If you know your way around Linux you most likely don’t need Proxmox and its pseudo-open-source… you can try Incus / LXD instead.

Avoid Proxmox and save yourself a LOT of headaches down the line. Go with Debian 12 + Incus/LXC, it runs VMs and containers very well. Proxmox ships with an old kernel that is so mangled and twisted that they shouldn’t even be calling it a Linux kernel. Also their management daemons and other internal shenanigans will delay your boot and crash your systems under certain circumstances.

LXD/Incus provides a management and automation layer that really makes things work smoothly - essentially what Proxmox does but properly done. With Incus you can create clusters, download, manage and create OS images, run backups and restores, bootstrap things with cloud-init, move containers and VMs between servers (even live sometimes).

Another big advantage is the fact that it provides a unified experience to deal with both containers and VMs, no need to learn two different tools / APIs as the same commands and options will be used to manage both. Even profiles defining storage, network resources and other policies can be shared and applied across both containers and VMs.

I draw your attention to containers (not docker), LXC containers because for most people full virtualization isn’t even required. In a small homelab if you can have containers that behave like full operating systems (minus the kernel) including persistence, VMs might not be required. Either way LXD/Incus will allow for both and you can easily mix and match and use what you require for each use case. Hell, you can even run Docker inside an LXC container.

For example, I virtualize the official HomeAssistant image with Incus because we all know how hard it is to get that thing running, however my NAS / Samba shares are just an LXD Debian 12 container with Samba4, Nginx and FileBrowser. Same goes for the torrent client that has its own container. Some other service I’ve exposed to the internet also runs in a full VM for isolation.

Like Proxmox, LXD/Incus isn’t about replacing existing virtualization techniques such as QEMU, KVM and libvirt, it is about augmenting them so they become easier to manage at scale and overall more efficient. I can guarantee you that most people running Proxmox today will eventually move to Incus and never look back. It works way better, true open-source, no bugs, no delayed security updates, no BS licenses and way less overhead.

Also, let’s consider something: why use Proxmox when half of its technology (the container part) was made by the same people who made LXD/Incus? I mean Incus is free, well funded and can be installed on a clean Debian system with way less overhead and also delivers both containers and VMs.

Yes, there’s an optional WebUI for it as well!

- Context on Incus vs LXD: https://www.theregister.com/2023/08/04/incus_lxd_fork/

- A sum of my experience with Proxmox over the years: https://lemmy.world/comment/7476411

Some documentation for you:

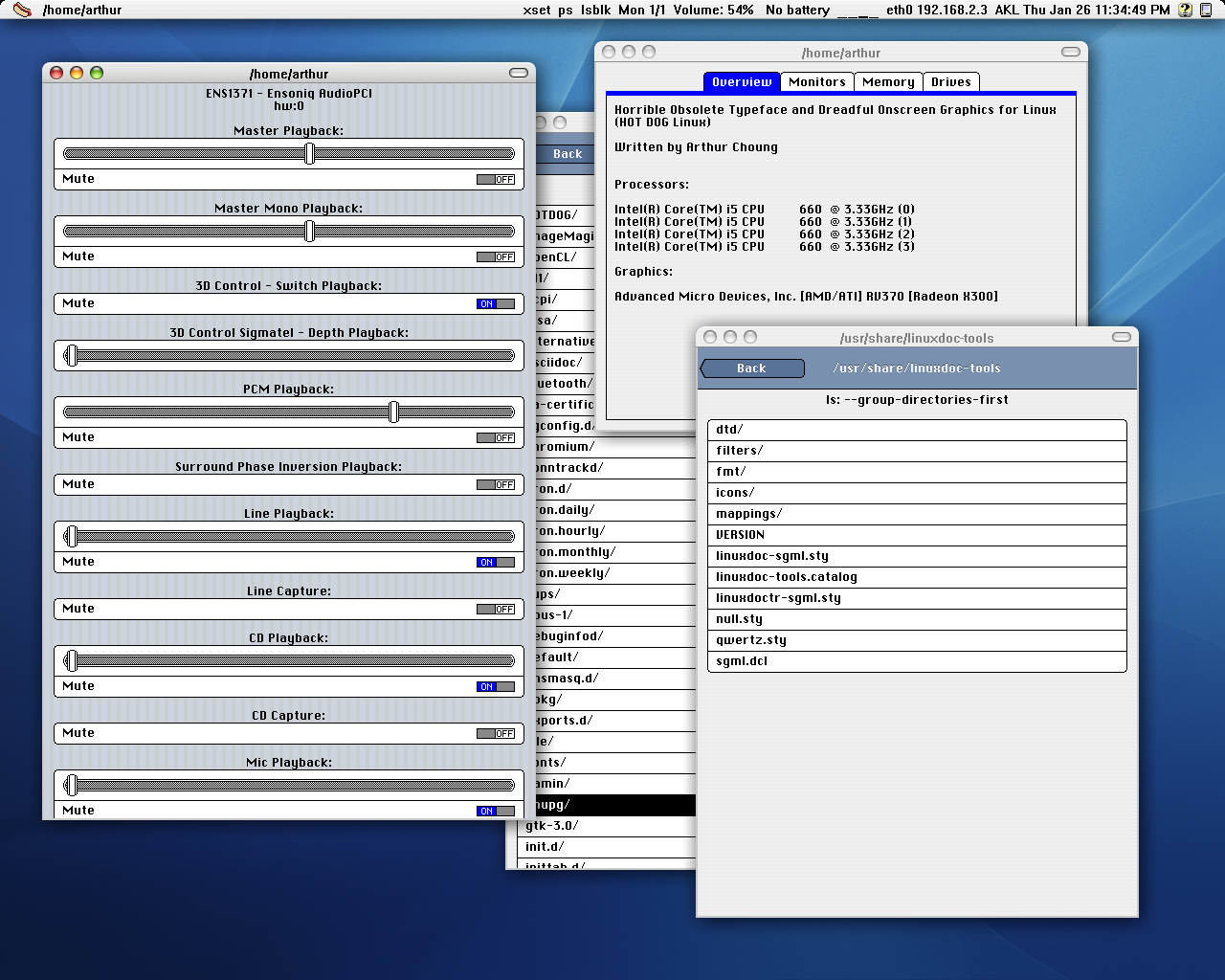

You will never get the same font rendering on Linux as on Windows as Windows font rendering (ClearType) is very strange, complicated and covered by patents.

Font rendering is also kind of a subjective thing. To anyone who is used to macOS, Windows font rendering looks wrong as well. Apple’s font rendering renders fonts much closer to how they would look printed out. Windows tries to increase readability by reducing blurriness and aligning everything perfectly with pixels, but it does this at the expense of accuracy.

Linux’s font rendering tends to be a bit behind, but is likely to be more similar to macOS than to Windows rendering as time goes forward. The fonts themselves are often made available by Microsoft for using on different systems, it’s just the rendering that is different.

For me, on my screens, just installing Segoe UI and tweaking the hinting / antialiasing under GNOME settings gets really close to what Windows delivers. The default Ubuntu font, Cantarell and Sans don’t seem to be very good fonts for a great rendering experience.

The following links may be of interest to you:

but never really thought to use it in my home network

Because you don’t need it. OPNsense and pfSense may make sense in some cases, however you’re running a small network and you most likely don’t require those. OpenWRT will provide you with a much cleaner open-source experience and also allow for all the customization you would like. Another great advantage of OpenWRT is the ability to install 3rd party stuff on your router; you may even use qemu to virtualize stuff like your Pi-Hole on it or simply run Docker containers.

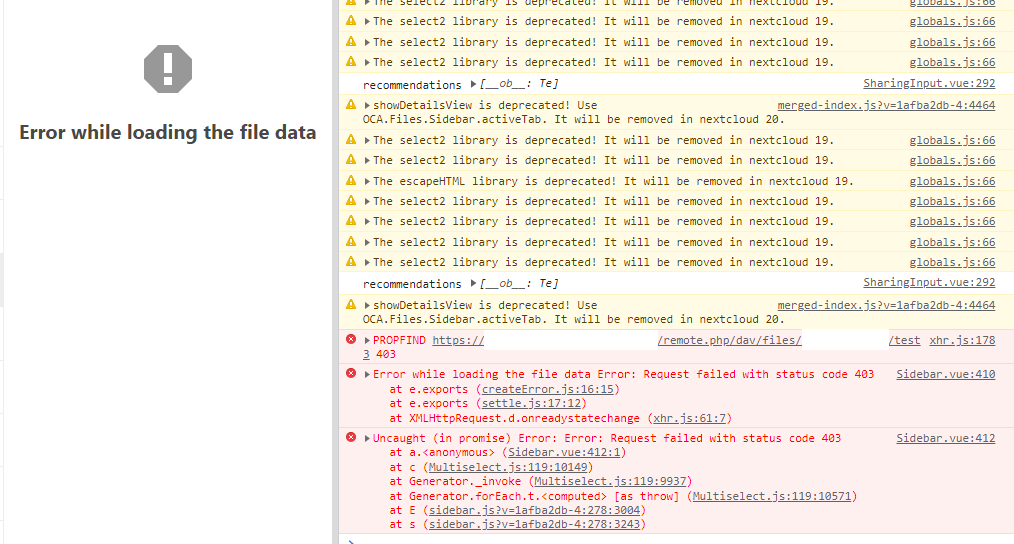

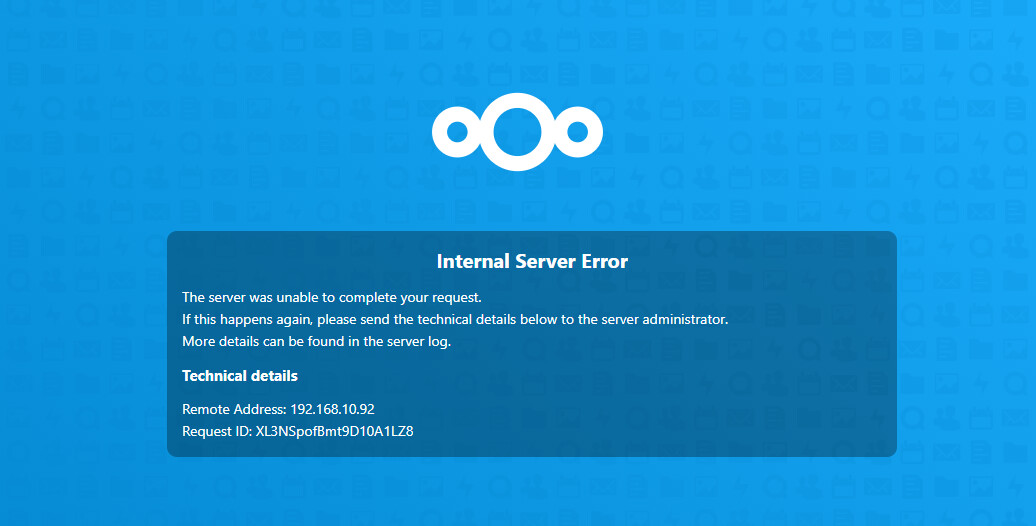

The point is that every single feature they try to add to it ends up as yet another buggy thing that never gets fixed. They should focus on making the core things work decently instead of adding new features. After all this time they didn’t get the sync to be as reliable as Syncthing, so why would they venture into webmail and whatnot?

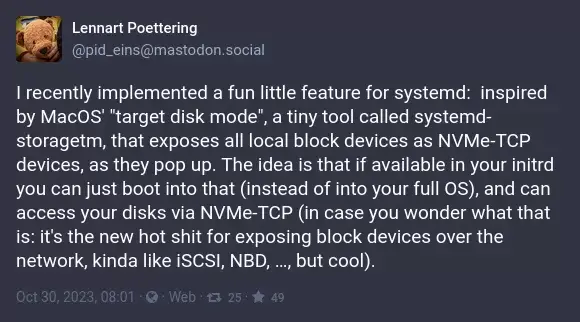

Well… Poettering will eventually work his way up to browser engines and then we’ll get something efficient… Here’s the announcement:

"There’s a new component in systemd, called “engined”. Or actually, it’s not a new component, it’s actually the long existing “WebKit” engine now done properly. The engine is also a lot more fun to use than “WebKit” or “Blink” because you can finally have hundreds of tabs open in your browser without running out of RAM.

Coming soon in systemd 981."

They forgot the part where margins should be included on things… once again.